We present the first edge-deployed instance of open-source hand gesture recognition to leverage the Coral TPU Accelerator.

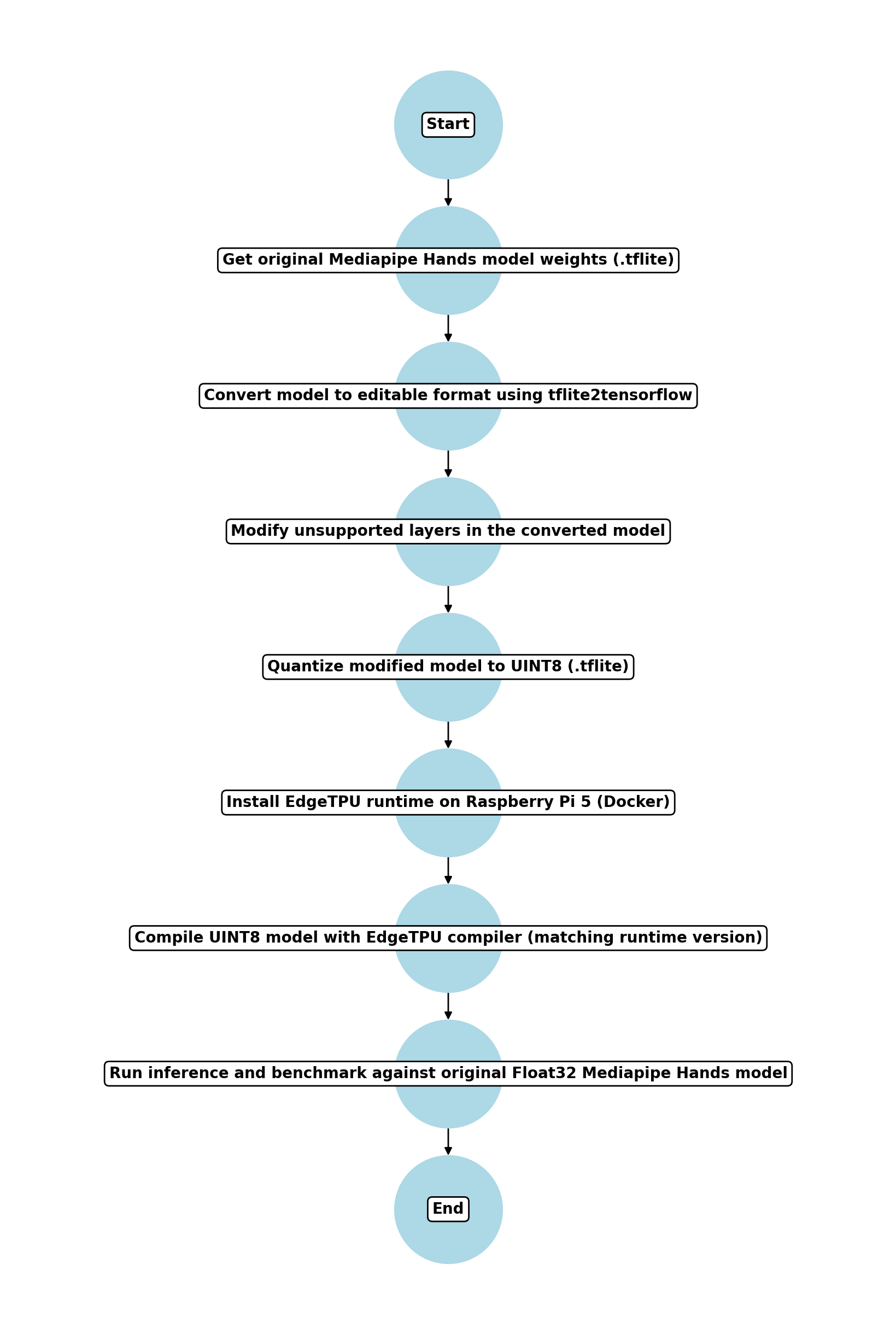

MediaPipe Hands is one of the state-of-the-art real-time hand-tracking and gesture-recognition models available as open source. However, the Coral TPU Accelerator can only run UINT8-quantized models built from a limited set of supported operations. By adapting two of the model's operations, transposed convolution and batch normalization, we are able to quantize the model and deploy it on the Coral TPU Accelerator. Offloading the machine learning workload to an edge device with a USB accelerator reduces latency and keeps user data on the user's own device.
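One common way to make batch normalization friendly to an integer-only accelerator is to fold it into the layer that precedes it, so that no separate normalization op remains at inference time. The sketch below illustrates that general technique on a small linear layer in plain Python. It is an assumption about what such an adaptation can look like, not a description of our exact changes; the function names and the `eps` default are illustrative.

```python
import math

def fold_batch_norm(weights, bias, gamma, beta, mean, var, eps=1e-3):
    """Fold per-output-channel batch norm into the preceding layer.

    weights holds one row of input weights per output channel.
    Returns (folded_weights, folded_bias) such that the folded layer
    alone reproduces batch_norm(layer(x)) at inference time.
    eps=1e-3 is an assumed value; use whatever the trained model uses.
    """
    folded_w, folded_b = [], []
    for row, b, g, bt, m, v in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)          # per-channel multiplier
        folded_w.append([w * scale for w in row])
        folded_b.append((b - m) * scale + bt)   # shifted and rescaled bias
    return folded_w, folded_b

def linear(weights, bias, x):
    """Plain linear layer: one dot product plus bias per output channel."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-3):
    """Inference-time batch normalization with fixed statistics."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]
```

The same per-channel rescaling applies to convolution kernels, since batch normalization at inference time is just an affine transform on each output channel.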

We further explore hardware designs based on a Coral SMD module, which would permit our product to be deployed at an $80 price point in a form factor smaller than a credit card.

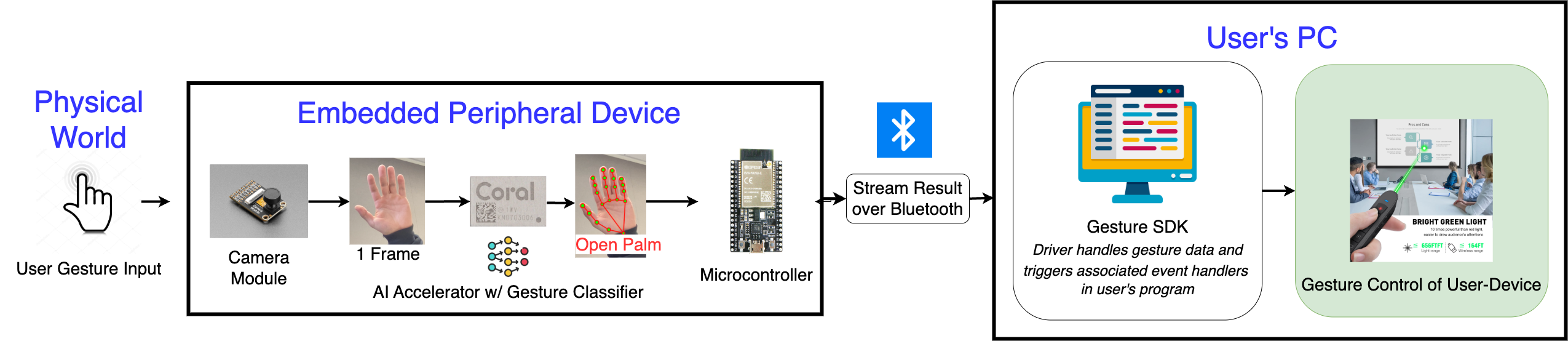

Our work is a step towards making gesture recognition more accessible and affordable for the masses. To support widespread adoption, we introduce an event-based SDK that makes incorporating gesture controls into spatial interfaces straightforward. The rest of our project uses this SDK to build a collection of demonstrative apps, all of which are operated solely through gestures received over Bluetooth through our SDK.
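To give a feel for what an event-based gesture interface looks like, here is a minimal sketch of the subscribe-and-dispatch pattern. All names (`GestureEvent`, `GestureBus`, `on`, `emit`, the gesture labels, and the confidence threshold) are hypothetical and chosen for illustration; they are not the SDK's actual API, and the Bluetooth transport is left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List

@dataclass(frozen=True)
class GestureEvent:
    name: str          # hypothetical gesture label, e.g. "swipe_left"
    confidence: float  # recognizer score in [0, 1]

Handler = Callable[[GestureEvent], None]

class GestureBus:
    """Routes recognized gestures to the handlers registered for them."""

    def __init__(self, min_confidence: float = 0.5) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.min_confidence = min_confidence

    def on(self, name: str, handler: Handler) -> None:
        """Subscribe a handler to one gesture name."""
        self._handlers[name].append(handler)

    def emit(self, event: GestureEvent) -> int:
        """Dispatch an event and return how many handlers were called.

        Low-confidence events are dropped so apps do not react to noise.
        """
        if event.confidence < self.min_confidence:
            return 0
        handlers = self._handlers.get(event.name, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

In an app, the receiving side of the wireless link would call `emit` for each decoded gesture, and UI code would only ever register handlers with `on`.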

This dataflow describes our process for converting a MediaPipe Gesture Recognition model to run on a Coral TPU Accelerator.
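At the heart of any conversion to an 8-bit accelerator is affine quantization, which maps real values to integers in [0, 255] through a scale and a zero point. The sketch below shows that arithmetic in plain Python. It is a simplified illustration of the general scheme, not our conversion tooling, and the helper names are assumptions.

```python
def quant_params(x_min, x_max, num_bits=8):
    """Derive (scale, zero_point) for an unsigned affine quantizer.

    The range is widened to include 0.0 so that zero is exactly
    representable, which matters for padding and ReLU outputs.
    """
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    qmin, qmax = 0, 2 ** num_bits - 1
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range; any positive scale works
    zero_point = int(round(qmin - x_min / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point

def quantize(values, scale, zero_point):
    """Map real values to uint8 codes, clamping anything out of range."""
    return [max(0, min(255, int(round(v / scale)) + zero_point))
            for v in values]

def dequantize(codes, scale, zero_point):
    """Map uint8 codes back to approximate real values."""
    return [(q - zero_point) * scale for q in codes]
```

For values inside the calibrated range, the round-trip error is at most half of `scale`, which is why choosing a tight but representative range during calibration matters for accuracy.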

This app demonstrates gestures as a high-level interface for high-throughput interaction. It is a code editor in which users curate AI-suggested actions on their codebase using gestures.

We deployed this app for you to try out gesture interaction for yourself.

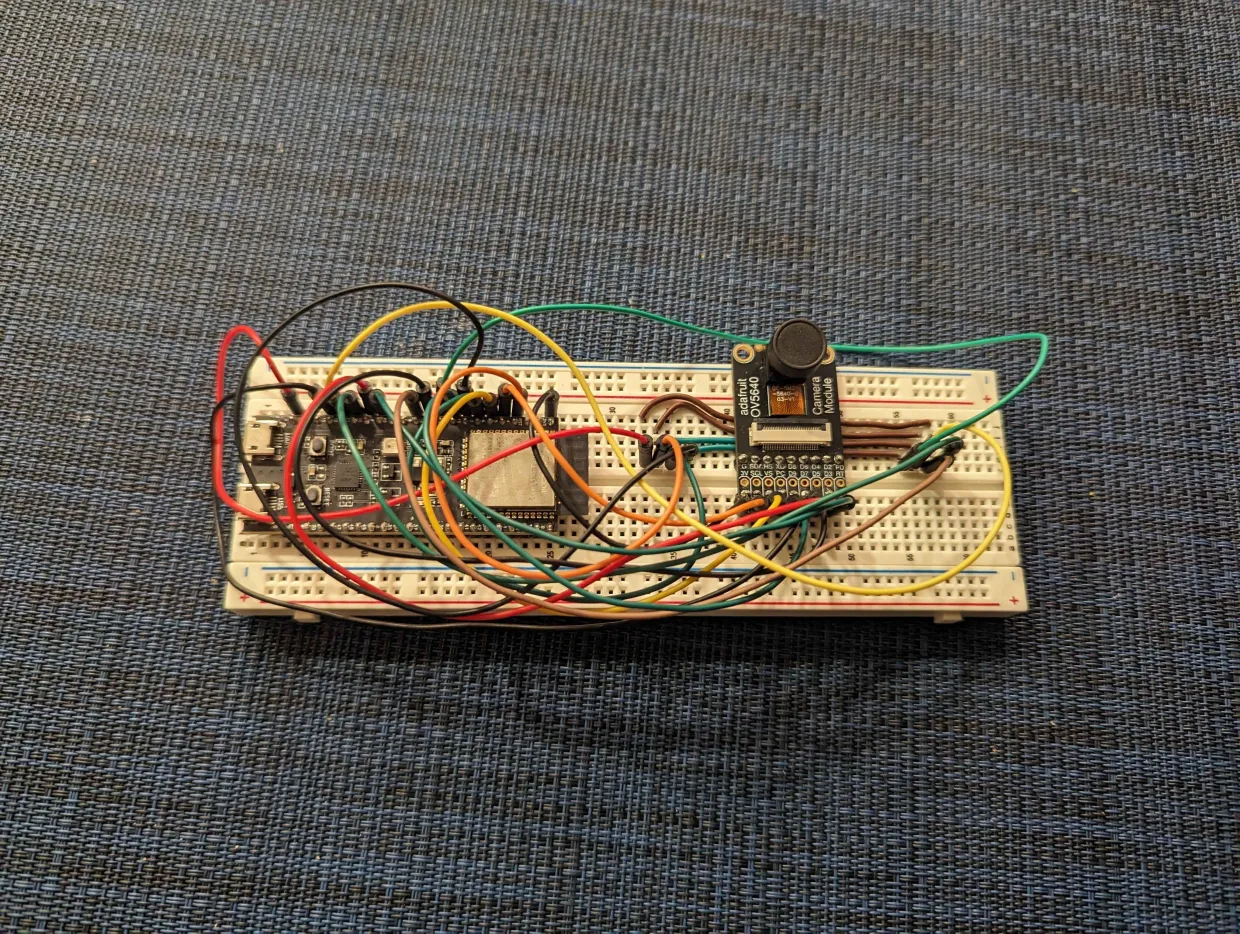

ESP32 with OV5640 camera module.

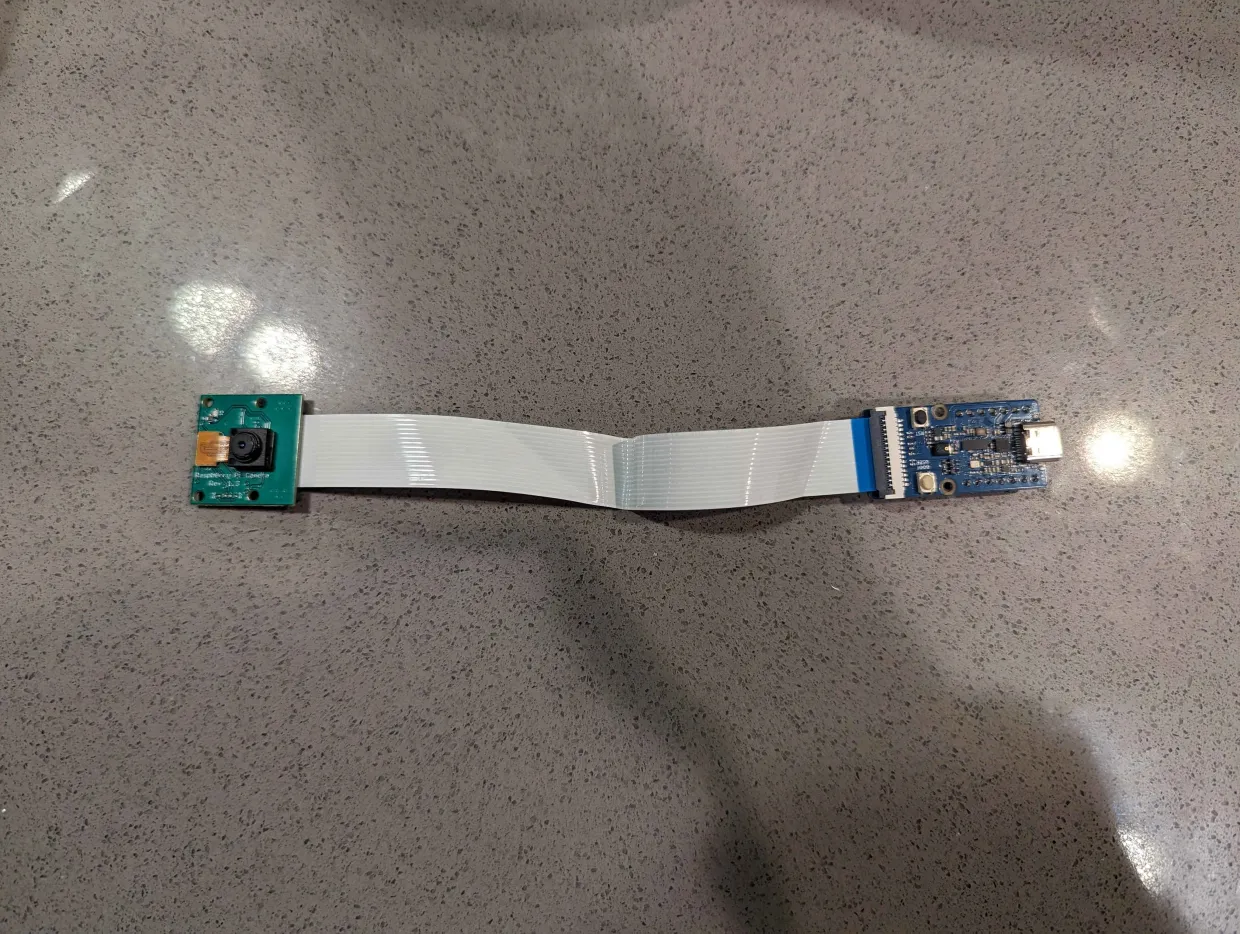

Grove AI chip with Himax WiseEye2 AI processor.

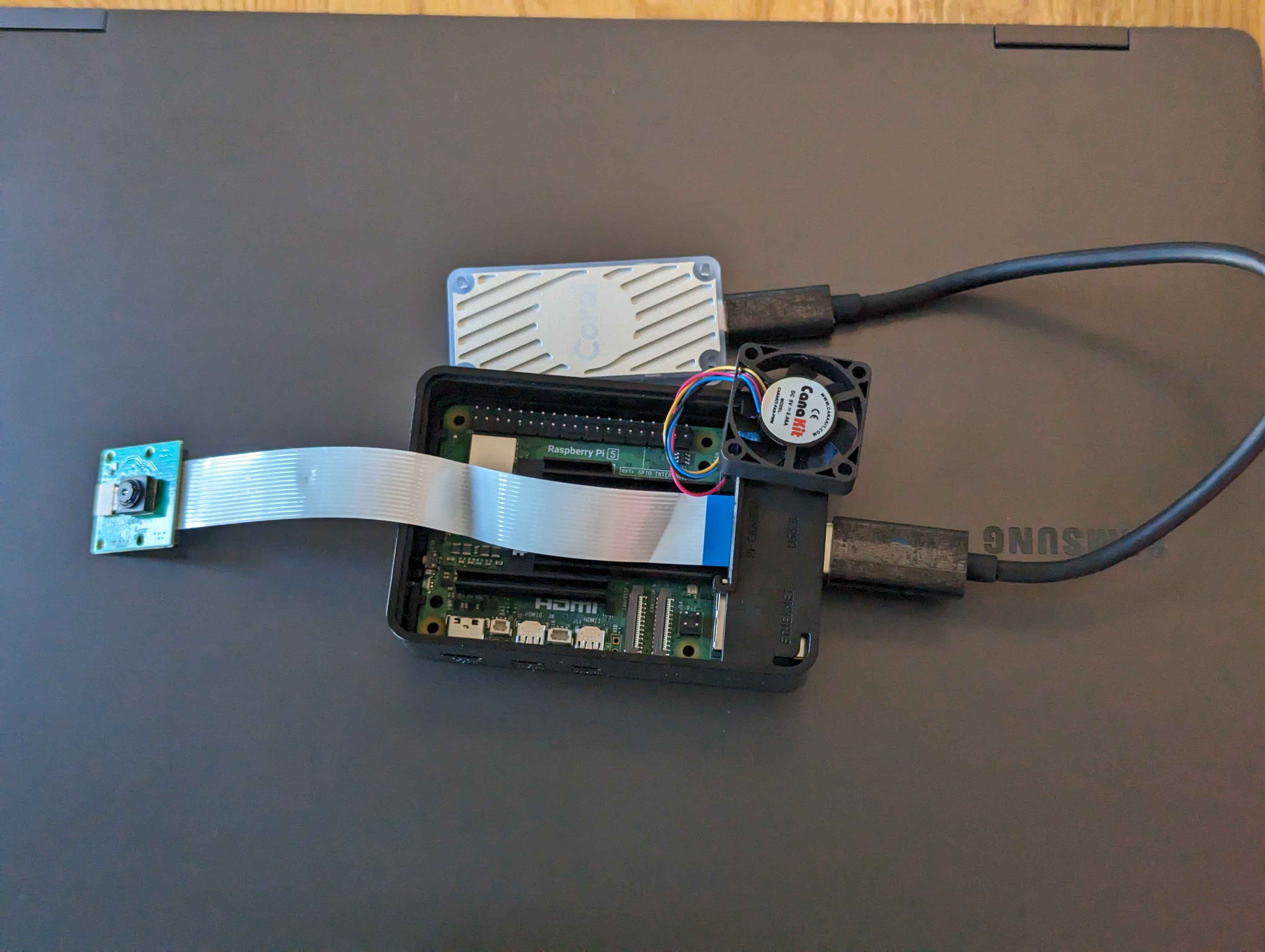

Raspberry Pi 5 with Google Coral USB Accelerator.

Our work is built on top of the Google MediaPipe Gesture Recognition API and the Google Coral Edge TPU Accelerator.